In this post I want to share my take on testing strategy in a continuous delivery (CD) process. I want to strip away the buzzwords and simply connect the dots between how we used to develop and deliver software and how we want to do it now with CD. We will also briefly look at the benefits CD provides.

I want to focus on testing because I feel it is the backbone of continuous delivery. Even if we build pipelines on any infrastructure, public or private cloud, and in any language, we can never achieve CD without a good testing strategy. I will discuss a few testing tips that helped me implement continuous delivery in my recent project.

Traditional approach

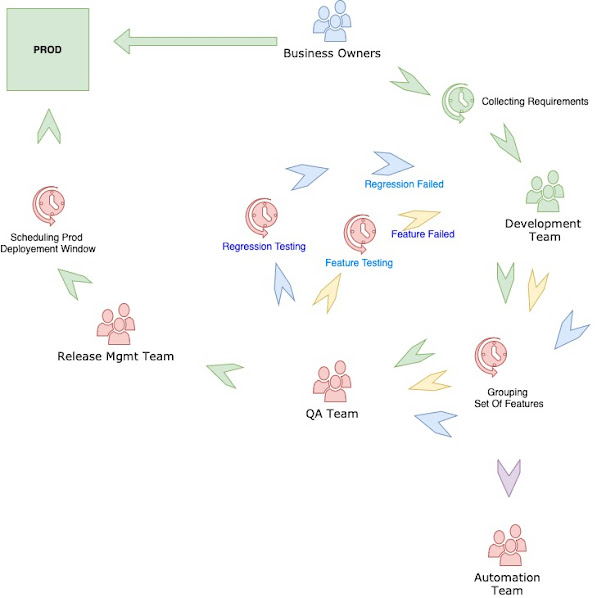

Let me start with a very high-level view of how we used to develop and deliver software. I am calling it the traditional approach in this context. The diagram shows a typical traditional SDLC.

Testing in a traditional approach (anti-pattern):

Now that we understand what the SDLC used to look like in the traditional approach, let’s turn our focus to testing. I am showing an inverted pyramid, which is now considered an anti-pattern but was common a few years ago. We had the most Manual Tests, followed by Functional/Acceptance Tests, and Unit Tests at the bottom. You may ask, “Why are unit tests at the bottom? Shouldn’t we have more unit tests than functional tests?” The reason was the common notion that a unit test only checks a single class or method and thus provides little value, whereas a functional test covers a complete feature and therefore provides more value.

I can think of a few drawbacks of this approach:

1. Too much time spent on repetitive manual work

2. The QA Team might be shared by multiple teams, causing more delays

3. No single team is accountable; multiple teams are involved, which creates more friction

4. Less focus on automation testing

5. Unit testing was ignored

Overall, the testing strategy was not geared towards faster delivery. It was also more error-prone, and feedback arrived late.

Continuous delivery approach

The SDLC in a continuous delivery model looks very different. If you look at the image below, this approach removes the wait times that are struck through. The Dev Team now has the responsibility to automate the tests for the features they are working on. This also includes coming up with scenarios in which they think their feature might fail. This seems like additional work for the Dev Team, and it is, so it should be accounted for in their user stories. The Dev Team is now more concerned with how the feature will be tested and will most likely keep that in mind when designing it. Plus, the Dev Team can write much better automated tests because they know the system and the inner workings of the application.

Everything that I have struck through in the above image is replaced by an automated pipeline:

Testing in a continuous delivery approach

Unit Tests:

1. Testing one method and class at a time

2. Fastest feedback to developers

3. Think of them as Lego pieces: each piece is validated and forms the foundation of the overall system

4. Unit tests run with every commit, and there should be a code coverage requirement as well. I generally use 85% as the minimum code coverage.
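
The coverage requirement in point 4 could be enforced as a gate in the pipeline. The sketch below is only an illustration: the function names `coverage_percent` and `meets_coverage`, and the idea of feeding them line counts taken from a coverage report, are assumptions. The 85% default is the threshold mentioned above.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Return line coverage as a percentage (hypothetical helper)."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= covered_lines <= total_lines:
        raise ValueError("covered_lines must be between 0 and total_lines")
    return 100.0 * covered_lines / total_lines


def meets_coverage(covered_lines: int, total_lines: int,
                   minimum: float = 85.0) -> bool:
    """True when coverage reaches the minimum.

    A pipeline step would reject the commit when this returns False.
    """
    return coverage_percent(covered_lines, total_lines) >= minimum
```

A commit that covers 849 of 1000 lines would be rejected by this check, while one that covers 850 would pass.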

Functional Tests:

1. Testing a feature flow. It could cover the entire feature, or the impact of the feature on a domain if the application is developed using Domain-Driven Design

2. Backbone of continuous deployment

3. Regression tests that can run on every commit

4. Make them part of the pipeline; a failing test should break the pipeline.
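
Point 4 can be pictured as a fail-fast runner: stages run in order, and the first failure stops everything after it. This is a minimal sketch, not how any particular CI tool works. The `run_pipeline` function and the idea of modelling each stage as a callable that returns `True` on success are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first one that fails.

    Returns (succeeded, names of the stages that were actually run).
    """
    executed: List[str] = []
    for name, stage in stages:
        executed.append(name)
        if not stage():
            # A failing stage breaks the pipeline: later stages never run.
            return False, executed
    return True, executed
```

With stages such as unit tests, functional tests and deploy, a functional test failure means the deploy stage is never reached.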

E2E Tests:

1. There should be very few E2E tests.

2. These tests should run across all domains and cover only the most critical functionality. Because they cut across domains with little or no mocking, they tend to have higher maintenance costs.

Challenges of Functional Tests:

Functional tests also have some challenges. Here are the ones I have encountered most often:

1. Slow and Fragile

2. Simulating or Mocking Data

3. Separate teams, especially if automation testing is kept outside the development team

Overcoming functional testing challenges:

I used the tips below to overcome some of these challenges, and they helped me immensely in my projects.

1. Keep the data and tests together. We divided the system into smaller domains, and each domain was treated as a complete unit. The full feature was tested across this domain, mocking the REST endpoints of any external domains. We used WireMock, which provides an admin API, and wrote the tests in Cucumber. Each test set its mock responses at the start and tore them down at the end.

2. Keep the tests independent. The data should be set up and cleaned up in such a way that the tests can run independently of each other.

3. Make the tests run in parallel

4. Run only the tests that might be related to the current commit. This is especially important with microservices. If the modified service plays no role in a feature (for example, it belongs to a different domain), we can skip that feature's tests. In addition, I generally run the critical or core feature tests irrespective of the commit's impact zone.

5. The same development team should be responsible for writing the automated tests as well. This is very important, as the Dev Team knows the most about the application and can also design the app so that it is more testable.

6. Never use functional tests as a replacement for unit tests. Code coverage is not the goal here, and functional tests cannot cover all permutations. The goal should be to test the feature's functionality.
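
The first two tips (set the mock responses at the start of a test, tear them down at the end, and keep tests independent) could be sketched as follows. This is an illustration in Python, not the Cucumber setup described above. It assumes a WireMock server whose admin API is reachable at a base URL you supply, and it uses the `POST /__admin/mappings` and `DELETE /__admin/mappings/{id}` endpoints. The names `stubbed`, `http_send` and `Sender` are hypothetical helpers.

```python
import json
import urllib.request
import uuid
from contextlib import contextmanager
from typing import Callable, Iterator, Optional

# A sender performs one HTTP call: (method, url, body).
Sender = Callable[[str, str, Optional[bytes]], None]


def http_send(method: str, url: str, body: Optional[bytes]) -> None:
    """Default sender: issue the request with the standard library."""
    headers = {"Content-Type": "application/json"} if body else {}
    request = urllib.request.Request(url, data=body, method=method,
                                     headers=headers)
    with urllib.request.urlopen(request):
        pass  # urlopen raises on HTTP error statuses, failing the test early


@contextmanager
def stubbed(admin_base: str, request: dict, response: dict,
            send: Sender = http_send) -> Iterator[str]:
    """Register one stub for the duration of a test, then remove it."""
    stub_id = str(uuid.uuid4())  # chosen client-side so teardown knows the id
    mapping = {"id": stub_id, "request": request, "response": response}
    send("POST", f"{admin_base}/__admin/mappings",
         json.dumps(mapping).encode())
    try:
        yield stub_id
    finally:
        # Teardown runs even when the test body raises, so no stub leaks
        # into the next test.
        send("DELETE", f"{admin_base}/__admin/mappings/{stub_id}", None)
```

A test would wrap its steps in something like `with stubbed("http://localhost:8080", {"method": "GET", "urlPath": "/orders/1"}, {"status": 200}):`, where the host, port and `/orders/1` path are placeholders. Because each test creates and deletes only its own stub, tests do not depend on each other's data, which also makes them easier to run in parallel.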